1. Caddy version (caddy version):

```
v2.4.0-beta.2 h1:DUaK4qtL3T0/gAm0fVVkHgcMN04r4zGpfPUZWHRR8QU=
```

2. How I run Caddy:

a. System environment:

Ubuntu 20.04.1 LTS

b. Command:

```
sudo systemctl restart caddy
```

c. Service/unit/compose file:

none

d. My complete Caddyfile or JSON config:

```caddyfile
{
	on_demand_tls {
		ask https://my.hypershapes.com/validate
		interval 2m
		burst 10
	}
}

(root) {
	root * /var/www/{args.0}
}

(baseSetup) {
	file_server
	php_fastcgi unix//run/php/php7.4-fpm.sock
	encode gzip zstd

	header {
		Strict-Transport-Security "max-age=31536000; includeSubDomains; preload; always"
		X-Frame-Options "SAMEORIGIN"
		X-XSS-Protection "1; mode=block"
		X-Content-Type-Options "nosniff"
	}

	@static {
		file
		path *.ico *.css *.js *.gif *.jpg *.jpeg *.png *.svg *.woff *.woff2 *.json
	}
	header @static Cache-Control max-age=5184000

	log {
		output file /var/log/caddy/access.log {
			roll_size 2MiB
			roll_keep 100
			roll_keep_for 1440h
		}
	}
}

my.hypershapes.com {
	import root hypershapes/public
	import baseSetup
}

affiliate.hypershapes.com {
	import root hypershapes/public
	import baseSetup
}

admin.hypershapes.com {
	import root hypershapes-master-admin/dist
	import baseSetup
}

*.hypershapes.com {
	import root hypershapes/public
	import baseSetup
	tls {
		dns cloudflare {REDACTED}
	}
}

https:// {
	import root hypershapes/public
	import baseSetup
	tls {
		on_demand
	}
}
```

3. The problem I’m having:

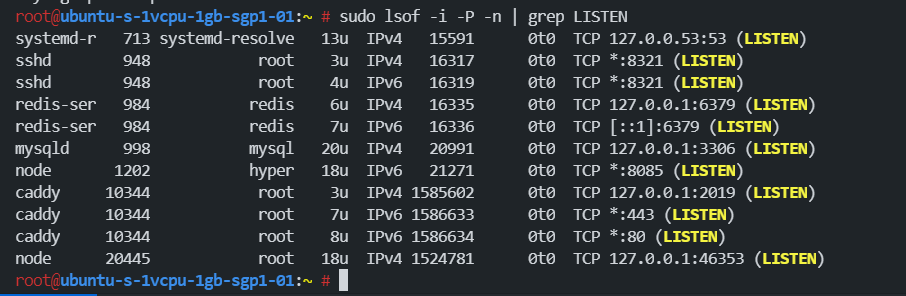

All of my websites served by Caddy stop responding, even though the caddy service status still shows active (running).

Status of the caddy service while the websites are not responding:

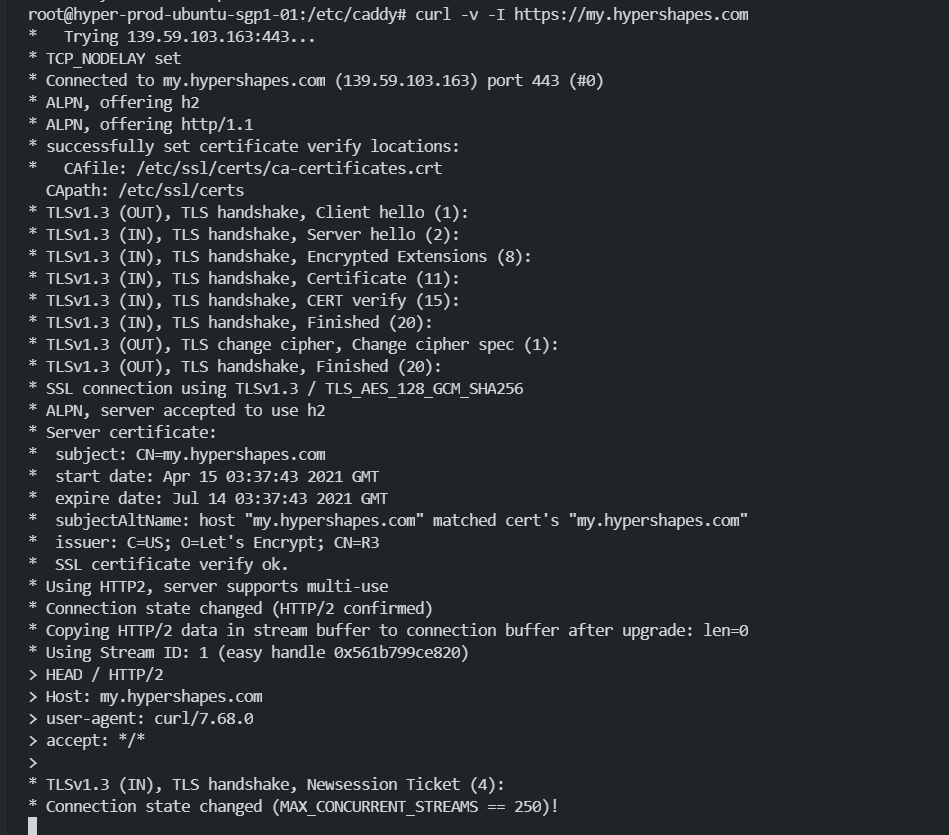

Result of `curl -v -I` against one of my websites:

No response is returned, even after a long wait.

This issue happens only on my production server and never on my staging server, even though both use the same Caddyfile. It usually happens about once a week, sometimes a few times a day. So far, the only fix is to run:

```
sudo systemctl restart caddy
```
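Only a restart clears the hang. One hypothesis worth ruling out, though it is not a confirmed cause, is that requests are waiting on PHP-FPM with no upper bound. Below is a minimal sketch of the `php_fastcgi` line from the `(baseSetup)` snippet with explicit timeouts. It assumes that the `dial_timeout`, `read_timeout` and `write_timeout` subdirectives are supported by this Caddy version (worth checking against the v2.4 docs). The durations are placeholders, not tuned values:

```caddyfile
# Sketch: would replace the one-line php_fastcgi directive inside (baseSetup).
# Placeholder durations; set read_timeout above the slowest legitimate PHP request.
php_fastcgi unix//run/php/php7.4-fpm.sock {
	# How long to wait when connecting to the PHP-FPM socket.
	dial_timeout  5s
	# How long to wait while reading the response from PHP-FPM.
	read_timeout  60s
	# How long to wait while sending the request to PHP-FPM.
	write_timeout 60s
}
```

If these timeouts start firing during an incident, the problem is more likely on the PHP-FPM side. In that case, the PHP-FPM log around the time of a hang may show warnings about the worker pool being exhausted.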

4. Error messages and/or full log output:

Journalctl log:

This error message keeps appearing in my log. Is it related to the problem I'm having?

```
May 12 07:15:56 hyper-prod-ubuntu-sgp1-01 caddy[25352]: {"level":"error","ts":1620803756.6630805,"logger":"http.handlers.reverse_proxy","msg":"aborting with incomplete response","error":"http: wrote more than the declared Content-Length"}
```
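The logger name `http.handlers.reverse_proxy` indicates the message comes from the reverse proxy that `php_fastcgi` is built on. The error text is the one Go's `net/http` package returns when a handler writes more body bytes than its `Content-Length` header declared. One way to check whether these errors line up in time with the hangs is sketched below, assuming the `debug` global option is available in v2.4. It raises Caddy's default log level so more detail reaches the journal. The `on_demand_tls` block is copied unchanged from the config above.

While the sites are hung, and before restarting, a goroutine dump from the admin endpoint may also show where requests are stuck. This assumes the admin endpoint is at its default address: `curl "http://localhost:2019/debug/pprof/goroutine?debug=2"`.

```caddyfile
{
	# Raise Caddy's default logger to DEBUG so more detail about
	# request handling is written to stderr, i.e. the systemd journal.
	debug

	# Existing global options, unchanged.
	on_demand_tls {
		ask https://my.hypershapes.com/validate
		interval 2m
		burst 10
	}
}
```

Debug logging is verbose, so it is probably best removed once the cause is found.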

5. What I already tried:

```
sudo systemctl restart caddy
```