1. Output of `caddy version`:

```
caddy version
v2.6.2 h1:wKoFIxpmOJLGl3QXoo6PNbYvGW4xLEgo32GPBEjWL8o=
```

2. How I run Caddy:

I installed Caddy on an AWS EC2 Ubuntu instance, following exactly the instructions in this link.

a. System environment:

Ubuntu 22.04.1 LTS (GNU/Linux 5.15.0-1022-aws x86_64)

b. Command:

```
sudo systemctl restart caddy
```

c. Service/unit/compose file:


d. My complete Caddy config:

```
testlave.live {
	root * /var/www/html/public
	encode zstd gzip
	file_server
	php_fastcgi unix//var/run/php/php8.1-fpm.sock
}
```

3. The problem I’m having:

I'm migrating from DigitalOcean to AWS.

For my previous DigitalOcean setup, Francis helped me with this template, which we used:

Load balancer config:

```
{
	on_demand_tls {
		ask https://lave.live/domain/verify
	}
}

(proxy) {
	reverse_proxy backend-1:80 backend-2:80 {
		# whatever load balancing config
	}
}

lave.live {
	import proxy
}

https:// {
	tls {
		on_demand
	}
	import proxy
}

www.lave.live {
	redir https://lave.live{uri}
}
```

Backend config:

```
http:// {
	root * /home/forge/lave.live/public
	encode zstd gzip
	php_fastcgi unix//var/run/php/php8.1-fpm.sock {
		# so that X-Forwarded-* headers are trusted
		trusted_proxies private_ranges
	}
	file_server
}
```

Now we want to achieve the same thing on AWS:

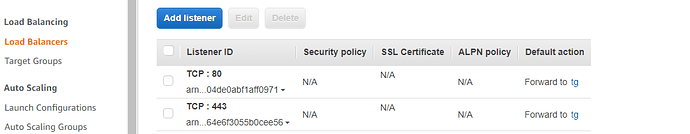

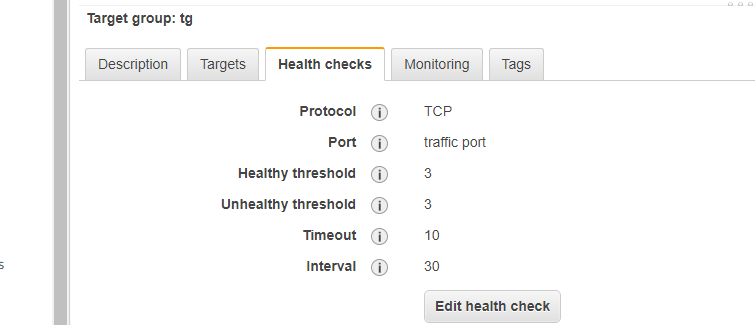
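Because an AWS Network Load Balancer forwards raw TCP instead of terminating TLS, one possible way to carry the DigitalOcean template over is to drop the separate proxy layer and let each instance terminate TLS itself, merging the on-demand TLS settings into the backend site. This is an untested sketch: it assumes the document root and PHP-FPM socket from the AWS config in section d, and the `ask` URL is simply copied from the DigitalOcean template; on AWS it would presumably need to point at wherever `/domain/verify` is actually served.

```
# Sketch only: each instance behind the TCP-level load balancer terminates TLS itself.
# Assumption: the ask URL is carried over unchanged from the DigitalOcean template.
{
	on_demand_tls {
		ask https://lave.live/domain/verify
	}
}

# Catch-all HTTPS site: certificates are obtained on demand,
# but only for domains the ask endpoint approves.
https:// {
	tls {
		on_demand
	}
	root * /var/www/html/public
	encode zstd gzip
	php_fastcgi unix//var/run/php/php8.1-fpm.sock
	file_server
}
```

The `trusted_proxies` line from the DigitalOcean backend is left out here, on the assumption that a layer-4 (TCP) load balancer adds no `X-Forwarded-*` headers for it to trust.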

I have two instances on AWS (in an Auto Scaling group), each running Caddy as the web server on Ubuntu, behind a Network Load Balancer. When I use this Caddyfile:

```
:80 {
	root * /var/www/html/public
	encode zstd gzip
	file_server
	php_fastcgi unix//var/run/php/php8.1-fpm.sock
}
```

the load balancer's AWS-provided DNS name (lb-454730932.eu-west-3.elb.amazonaws.com) loads my web app (over plain HTTP, without SSL).

But when I point my domain name (testlave.live) at this same load balancer in Route 53, the site doesn't load at all and my browser shows “site cannot be reached”.

Below is the Caddyfile I used for the domain (testlave.live):

```
testlave.live {
	root * /var/www/html/public
	encode zstd gzip
	file_server
	php_fastcgi unix//var/run/php/php8.1-fpm.sock
}
```
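The log in section 4 shows that with this site block Caddy serves testlave.live on HTTPS port 443 and enables automatic HTTP->HTTPS redirects, so behind the load balancer both TCP 80 and TCP 443 presumably need to be forwarded to the instances. Separately, when two instances each keep their own certificate storage, the load balancer may route an ACME challenge for testlave.live to the instance that did not start it. Caddy instances that share the same storage coordinate certificate management, so a minimal sketch is to point both at one shared directory. The path `/mnt/efs/caddy` is hypothetical: it assumes a filesystem (for example EFS) mounted at the same path on both instances and writable by the `caddy` user.

```
# Sketch: both instances share one certificate store.
# Assumption: /mnt/efs/caddy is a hypothetical shared mount, identical on both
# instances and writable by the caddy user.
{
	storage file_system /mnt/efs/caddy
}

testlave.live {
	root * /var/www/html/public
	encode zstd gzip
	file_server
	php_fastcgi unix//var/run/php/php8.1-fpm.sock
}
```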

4. Error messages and/or full log output:

```
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.8296325,"msg":"using provided configuration","config_file":"/etc/caddy/Caddyfile","config_adapter":""}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"warn","ts":1666221537.8309634,"msg":"Caddyfile input is not formatted; run the 'caddy fmt' command to fix inconsistencies","adapter":"caddyfile","file":"/etc/caddy/Caddyfile","line":2}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.8322792,"logger":"admin","msg":"admin endpoint started","address":"localhost:2019","enforce_origin":false,"origins":["//localhost:2019","//[::1]:2019","//127.0.0.1:2019"]}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.8325477,"logger":"http","msg":"server is listening only on the HTTPS port but has no TLS connection policies; adding one to enable TLS","server_name":"srv0","https_port":443}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.832694,"logger":"http","msg":"enabling automatic HTTP->HTTPS redirects","server_name":"srv0"}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.8333313,"logger":"http","msg":"enabling HTTP/3 listener","addr":":443"}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.8335338,"msg":"failed to sufficiently increase receive buffer size (was: 208 kiB, wanted: 2048 kiB, got: 416 kiB). See https://github.com/lucas-clemente/quic-go/wiki/UDP-Receive-Buffer-Size for details."}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.8337584,"logger":"http.log","msg":"server running","name":"srv0","protocols":["h1","h2","h3"]}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.8339226,"logger":"http.log","msg":"server running","name":"remaining_auto_https_redirects","protocols":["h1","h2","h3"]}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.8340592,"logger":"http","msg":"enabling automatic TLS certificate management","domains":["testlave.live"]}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.8351243,"msg":"autosaved config (load with --resume flag)","file":"/var/lib/caddy/.config/caddy/autosave.json"}
Oct 19 23:18:57 ip-172-31-46-178 systemd[1]: Started Caddy.
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.841637,"logger":"tls.cache.maintenance","msg":"started background certificate maintenance","cache":"0xc00011b2d0"}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.8418572,"logger":"tls","msg":"cleaning storage unit","description":"FileStorage:/var/lib/caddy/.local/share/caddy"}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.8429139,"logger":"tls","msg":"finished cleaning storage units"}
Oct 19 23:18:57 ip-172-31-46-178 caddy[3383]: {"level":"info","ts":1666221537.8435688,"msg":"serving initial configuration"}
```

5. What I already tried:

I also tried pointing my domain directly at the IP of one of my instances, and of course that worked. But I don't want a single instance serving my web app, which is why I need the load balancer.

I tried all AWS load balancer types: Network, Application, Classic, etc.

6. Links to relevant resources:

Link to the thread where Francis helped me